AIって結局何なのかよく分からないので、とりあえず100日間勉強してみた Day60

経緯についてはこちらをご参照ください。

■本日の進捗

- 誤差逆伝播法を理解

■はじめに

今回も「ゼロから作るDeep Learning Pythonで学ぶディープラーニングの理論と実装(オライリー・ジャパン)」で、深層学習を学んでいきます。

前回までニューラルネットワークで用いる主な層から、ReLU層、Sigmoid層、全結合層、Softmax層を順伝播と逆伝播を使える形で実装してきました。今回はこれらを用いて実際にニューラルネットワークに組み込んでみたいと思います。

■誤差逆伝播法

誤差逆伝播法(backpropagation)とは、ニューラルネットワークが重みを学習するためのアルゴリズムで、順伝播による出力に対して誤差を求め、その誤差を出力層から入力層へ向かって逆伝播させることで、ニューラルネットワーク上のパラメータを更新します。

早速、ニューラルネットワークに誤差逆伝播法を実装してみます。

import sys

import numpy as np

import matplotlib.pyplot as plt

sys.path.append("./")

from work.mnist import load_mnist

class ReLU:

def __init__(self):

self.mask = None

def forward(self, x):

self.mask = (x <= 0)

out = np.maximum(0, x)

return out

def backward(self, dout):

dout[self.mask] = 0

dx = dout

return dx

class Affine:

def __init__(self, W, b):

self.W = W

self.b = b

self.x = None

self.dW = None

self.db = None

def forward(self, x):

self.x = x

out = np.dot(x, self.W) + self.b

return out

def backward(self, dout):

self.dW = np.dot(self.x.T, dout)

self.db = np.sum(dout, axis=0)

dx = np.dot(dout, self.W.T)

return dx

class SoftmaxCrossEntropy:

def __init__(self):

self.output = None

self.grad_input = None

def forward(self, logits):

exp_values = np.exp(logits - np.max(logits, axis=1, keepdims=True))

self.output = exp_values / np.sum(exp_values, axis=1, keepdims=True)

return self.output

def backward(self, y_true):

self.grad_input = self.output - y_true

return self.grad_input

def cross_entropy_error(y, t):

delta = 1e-7

return -np.sum(t * np.log(y + delta)) / y.shape[0]

class NeuralNetwork:

def __init__(self, input_size, hidden_size, output_size, weight_init_std=0.01):

self.params = {}

self.params['W1'] = weight_init_std * np.random.randn(input_size, hidden_size)

self.params['b1'] = np.zeros(hidden_size)

self.params['W2'] = weight_init_std * np.random.randn(hidden_size, hidden_size)

self.params['b2'] = np.zeros(hidden_size)

self.params['W3'] = weight_init_std * np.random.randn(hidden_size, output_size)

self.params['b3'] = np.zeros(output_size)

self.layers = [

Affine(self.params['W1'], self.params['b1']),

ReLU(),

Affine(self.params['W2'], self.params['b2']),

ReLU(),

Affine(self.params['W3'], self.params['b3'])

]

self.loss_layer = SoftmaxCrossEntropy()

def forward(self, x):

for layer in self.layers:

x = layer.forward(x)

return x

def compute_loss(self, x, y_true):

logits = self.forward(x)

loss = cross_entropy_error(self.loss_layer.forward(logits), y_true)

return loss

def backward(self, y_true):

dout = self.loss_layer.backward(y_true)

for layer in reversed(self.layers):

dout = layer.backward(dout)

def update(self, lr):

for layer in self.layers:

if isinstance(layer, Affine):

layer.W -= lr * layer.dW

layer.b -= lr * layer.db

(X_train, y_train), (X_test, y_test) = load_mnist(normalize=True, one_hot_label=True)

train_size = X_train.shape[0]

batch_size = 10

np.random.seed(8)

batch_mask = np.random.choice(train_size, batch_size)

X_batch = X_train[batch_mask]

y_batch = y_train[batch_mask]

lr = 0.01

step_num = 1000

input_size = 784

hidden_size = 200

output_size = 10

network = NeuralNetwork(input_size, hidden_size, output_size)

loss_history = []

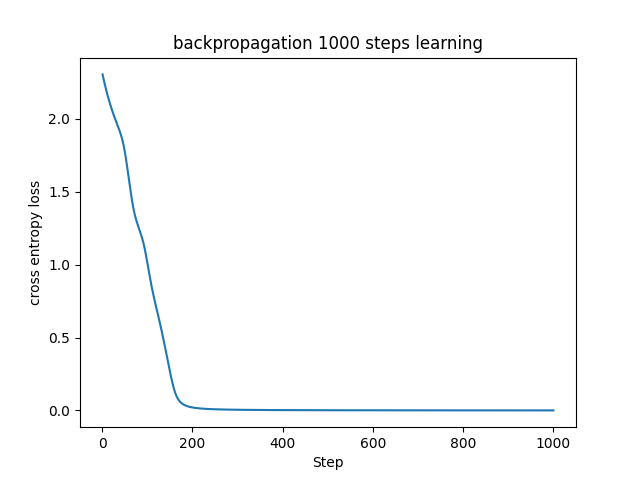

for i in range(step_num):

loss = network.compute_loss(X_batch, y_batch)

loss_history.append(loss)

network.backward(y_batch)

network.update(lr)

print(f"Step {i+1}, Loss: {loss}")

plt.plot(range(1, step_num + 1), loss_history)

plt.xlabel("Step")

plt.ylabel("cross entropy loss")

plt.title("backpropagation 1000 steps learning")

plt.show()

各層の逆伝播自体はこれまで構築してきたものです。

ここでは新たにNeuralNetworkクラスを構築して、誤差逆伝播法の流れそのものを記述しています。まずは初期化(__init__)としてニューラルネットワークで用いる層を定義しています。このように事前に構築しておいた層を好きなだけ書き足していけば好きな組み合わせで何層でも深い層の(複雑な)モデルを作れることがニューラルネットワークの素晴らしさです。次に順伝播(forward)を行い、その損失を計算(compute_loss)します。最後に逆伝播(backward)を実行してからパラメータを更新(update)しています。

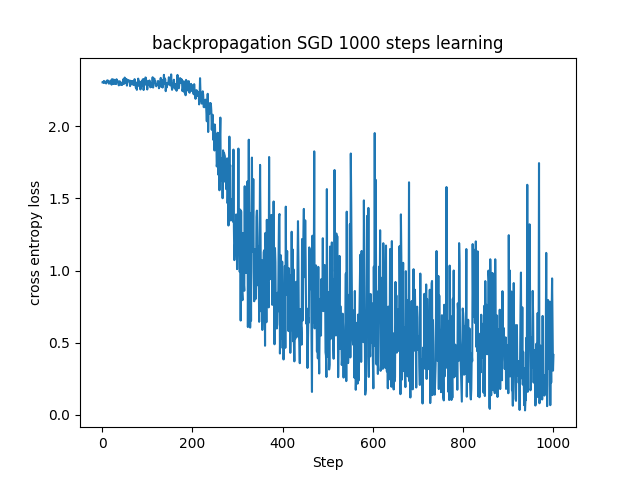

誤差逆伝播法においてもミニバッチ学習に確率的勾配降下法(SGD)が用いることができます。

import sys

import numpy as np

import matplotlib.pyplot as plt

sys.path.append("./")

from work.mnist import load_mnist

class ReLU:

def __init__(self):

self.mask = None

def forward(self, x):

self.mask = (x <= 0)

out = np.maximum(0, x)

return out

def backward(self, dout):

dout[self.mask] = 0

dx = dout

return dx

class Affine:

def __init__(self, W, b):

self.W = W

self.b = b

self.x = None

self.dW = None

self.db = None

def forward(self, x):

self.x = x

out = np.dot(x, self.W) + self.b

return out

def backward(self, dout):

self.dW = np.dot(self.x.T, dout)

self.db = np.sum(dout, axis=0)

dx = np.dot(dout, self.W.T)

return dx

class SoftmaxCrossEntropy:

def __init__(self):

self.output = None

self.grad_input = None

def forward(self, logits):

exp_values = np.exp(logits - np.max(logits, axis=1, keepdims=True))

self.output = exp_values / np.sum(exp_values, axis=1, keepdims=True)

return self.output

def backward(self, y_true):

self.grad_input = self.output - y_true

return self.grad_input

def cross_entropy_error(y, t):

delta = 1e-7

return -np.sum(t * np.log(y + delta)) / y.shape[0]

class NeuralNetwork:

def __init__(self, input_size, hidden_size, output_size, weight_init_std=0.01):

self.params = {}

self.params['W1'] = weight_init_std * np.random.randn(input_size, hidden_size)

self.params['b1'] = np.zeros(hidden_size)

self.params['W2'] = weight_init_std * np.random.randn(hidden_size, hidden_size)

self.params['b2'] = np.zeros(hidden_size)

self.params['W3'] = weight_init_std * np.random.randn(hidden_size, output_size)

self.params['b3'] = np.zeros(output_size)

self.layers = [

Affine(self.params['W1'], self.params['b1']),

ReLU(),

Affine(self.params['W2'], self.params['b2']),

ReLU(),

Affine(self.params['W3'], self.params['b3'])

]

self.loss_layer = SoftmaxCrossEntropy()

def forward(self, x):

for layer in self.layers:

x = layer.forward(x)

return x

def compute_loss(self, x, y_true):

logits = self.forward(x)

loss = cross_entropy_error(self.loss_layer.forward(logits), y_true)

return loss

def backward(self, y_true):

dout = self.loss_layer.backward(y_true)

for layer in reversed(self.layers):

dout = layer.backward(dout)

def update(self, lr):

for layer in self.layers:

if isinstance(layer, Affine):

layer.W -= lr * layer.dW

layer.b -= lr * layer.db

(X_train, y_train), (X_test, y_test) = load_mnist(normalize=True, one_hot_label=True)

train_size = X_train.shape[0]

batch_size = 10

np.random.seed(8)

lr = 0.01

step_num = 1000

input_size = 784

hidden_size = 200

output_size = 10

network = NeuralNetwork(input_size, hidden_size, output_size)

loss_history = []

for i in range(step_num):

batch_mask = np.random.choice(train_size, batch_size)

X_batch = X_train[batch_mask]

y_batch = y_train[batch_mask]

loss = network.compute_loss(X_batch, y_batch)

loss_history.append(loss)

network.backward(y_batch)

network.update(lr)

print(f"Step {i+1}, Loss: {loss}")

plt.plot(range(1, step_num + 1), loss_history)

plt.xlabel("Step")

plt.ylabel("cross entropy loss")

plt.title("backpropagation SGD 1000 steps learning")

plt.show()

やはり振動が激しいのでバッチサイズを上げてみます。

import sys

import numpy as np

import matplotlib.pyplot as plt

sys.path.append("./")

from work.mnist import load_mnist

class ReLU:

def __init__(self):

self.mask = None

def forward(self, x):

self.mask = (x <= 0)

out = np.maximum(0, x)

return out

def backward(self, dout):

dout[self.mask] = 0

dx = dout

return dx

class Affine:

def __init__(self, W, b):

self.W = W

self.b = b

self.x = None

self.dW = None

self.db = None

def forward(self, x):

self.x = x

out = np.dot(x, self.W) + self.b

return out

def backward(self, dout):

self.dW = np.dot(self.x.T, dout)

self.db = np.sum(dout, axis=0)

dx = np.dot(dout, self.W.T)

return dx

class SoftmaxCrossEntropy:

def __init__(self):

self.output = None

self.grad_input = None

def forward(self, logits):

exp_values = np.exp(logits - np.max(logits, axis=1, keepdims=True))

self.output = exp_values / np.sum(exp_values, axis=1, keepdims=True)

return self.output

def backward(self, y_true):

self.grad_input = self.output - y_true

return self.grad_input

def cross_entropy_error(y, t):

delta = 1e-7

return -np.sum(t * np.log(y + delta)) / y.shape[0]

class NeuralNetwork:

def __init__(self, input_size, hidden_size, output_size, weight_init_std=0.01):

self.params = {}

self.params['W1'] = weight_init_std * np.random.randn(input_size, hidden_size)

self.params['b1'] = np.zeros(hidden_size)

self.params['W2'] = weight_init_std * np.random.randn(hidden_size, hidden_size)

self.params['b2'] = np.zeros(hidden_size)

self.params['W3'] = weight_init_std * np.random.randn(hidden_size, output_size)

self.params['b3'] = np.zeros(output_size)

self.layers = [

Affine(self.params['W1'], self.params['b1']),

ReLU(),

Affine(self.params['W2'], self.params['b2']),

ReLU(),

Affine(self.params['W3'], self.params['b3'])

]

self.loss_layer = SoftmaxCrossEntropy()

def forward(self, x):

for layer in self.layers:

x = layer.forward(x)

return x

def compute_loss(self, x, y_true):

logits = self.forward(x)

loss = cross_entropy_error(self.loss_layer.forward(logits), y_true)

return loss

def backward(self, y_true):

dout = self.loss_layer.backward(y_true)

for layer in reversed(self.layers):

dout = layer.backward(dout)

def update(self, lr):

for layer in self.layers:

if isinstance(layer, Affine):

layer.W -= lr * layer.dW

layer.b -= lr * layer.db

(X_train, y_train), (X_test, y_test) = load_mnist(normalize=True, one_hot_label=True)

train_size = X_train.shape[0]

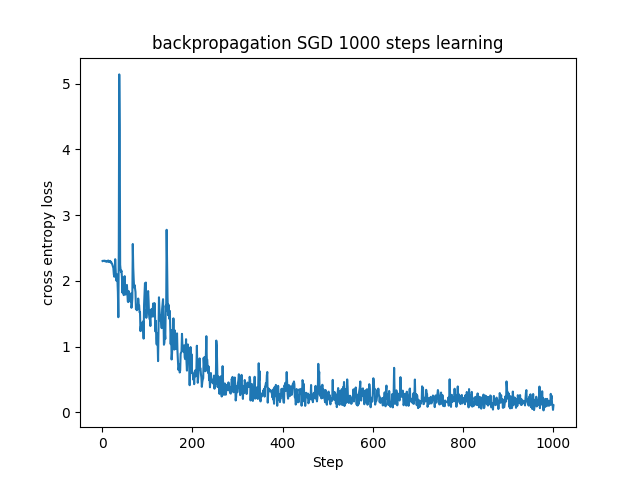

batch_size = 100

np.random.seed(8)

lr = 0.01

step_num = 1000

input_size = 784

hidden_size = 200

output_size = 10

network = NeuralNetwork(input_size, hidden_size, output_size)

loss_history = []

for i in range(step_num):

batch_mask = np.random.choice(train_size, batch_size)

X_batch = X_train[batch_mask]

y_batch = y_train[batch_mask]

loss = network.compute_loss(X_batch, y_batch)

loss_history.append(loss)

network.backward(y_batch)

network.update(lr)

print(f"Step {i+1}, Loss: {loss}")

plt.plot(range(1, step_num + 1), loss_history)

plt.xlabel("Step")

plt.ylabel("cross entropy loss")

plt.title("backpropagation SGD 1000 steps learning")

plt.show()

バッチサイズを10倍にしてみたら学習に用いるデータの乖離が減少して学習効率が良くなっています。

■おわりに

今回はこれまで構築してきた(主に数式をこねくり回してきた)各層をニューラルネットワークに実装して、誤差逆伝播法を組み込んでみました。

一度作った層をどこにいくつ置くかは任意で、プログラム上もその変更が容易なことが素晴らしいです。ぜひお好きな層数のニューラルネットワークを作って遊んでみてください。計算も結構高速ですよ!

■参考文献

- Andreas C. Muller, Sarah Guido. Pythonではじめる機械学習. 中田 秀基 訳. オライリー・ジャパン. 2017. 392p.

- 斎藤 康毅. ゼロから作るDeep Learning Pythonで学ぶディープラーニングの理論と実装. オライリー・ジャパン. 2016. 320p.

- ChatGPT. 4o mini. OpenAI. 2024. https://chatgpt.com/

- API Reference. scikit-learn.org. https://scikit-learn.org/stable/api/index.html